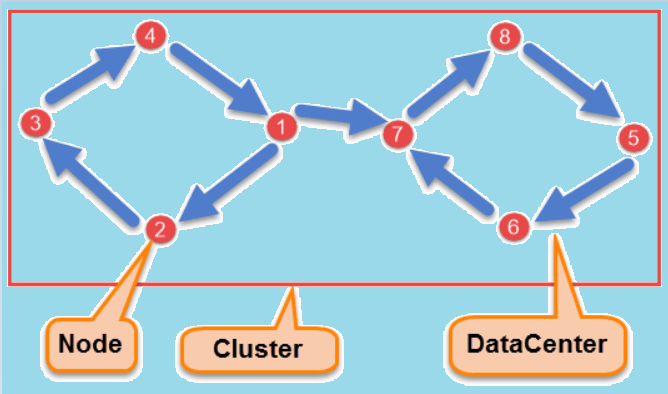

Cassandra handles big data workloads by distributing data across all the nodes in a cluster. The nodes form a peer-to-peer distributed system, so Cassandra has no single point of failure. Every node performs the same functions and is interconnected with the other nodes, yet each remains independent. Data can reside anywhere in the cluster, but every node accepts read and write requests, and if a node fails, the other nodes in the cluster serve those requests.

Data Replication in Cassandra:

Cassandra achieves fault tolerance through replication. For example, in a four-node cluster in which every node holds a copy of the same data, the remaining three nodes can still serve requests if one node fails. If some replicas hold an out-of-date value, Cassandra still returns the most recent value to the client and performs a read repair in the background to update the stale replicas.
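The four-node scenario above can be sketched in a few lines of Python. This is a toy model, not Cassandra's implementation: the `Replica` class and the `write` and `read` helpers are hypothetical names introduced for illustration, and it assumes each value carries a write timestamp so that the newest version can be identified.

```python
class Replica:
    """Hypothetical stand-in for one node holding a full copy of the data."""

    def __init__(self, name):
        self.name = name
        self.up = True
        self.data = {}  # key -> (timestamp, value)


def write(replicas, key, value, timestamp):
    # Store the write on every replica that is currently reachable.
    for replica in replicas:
        if replica.up:
            replica.data[key] = (timestamp, value)


def read(replicas, key):
    # Collect versions from reachable replicas and return the newest by timestamp.
    versions = [r.data[key] for r in replicas if r.up and key in r.data]
    return max(versions, key=lambda tv: tv[0])[1]


nodes = [Replica(f"node{i}") for i in range(1, 5)]
write(nodes, "city", "Pune", timestamp=1)
nodes[3].up = False   # node4 fails and misses the next write
write(nodes, "city", "Mumbai", timestamp=2)
nodes[3].up = True    # node4 comes back holding a stale value
nodes[0].up = False   # a different node fails
latest = read(nodes, "city")
```

With one node down and one node stale, the read is still answered from the three reachable nodes, and the version with the latest timestamp is the one returned.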

Main Components of Cassandra:

Node:

It is used to store data.

Data center:

It acts as a collection of related nodes.

Cluster:

It contains one or more data centers.

Commit log:

It is a crash-recovery mechanism. Every write operation is recorded in the commit log.

Mem-table:

It is a memory-resident data structure, in which the data is written after the commit log.

SSTable:

It is the disk file to which data is flushed from the mem-table when the contents of the mem-table reach a threshold value.

Bloom filter:

It is a special kind of cache used to test whether an element is a member of a set. Bloom filters are fast, probabilistic data structures: they can report a false positive but never a false negative. They are consulted on every read query.
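The set-membership check that a Bloom filter performs can be sketched as follows. This is a minimal illustration rather than Cassandra's own implementation: the `BloomFilter` class, its parameters, and the use of salted SHA-256 hashes are all assumptions made for the example.

```python
import hashlib


class BloomFilter:
    """Toy Bloom filter: may give false positives, never false negatives."""

    def __init__(self, num_bits=1024, num_hashes=3):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = [False] * num_bits

    def _positions(self, key):
        # Derive num_hashes bit positions by salting the key with an index.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{key}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, key):
        for pos in self._positions(key):
            self.bits[pos] = True

    def might_contain(self, key):
        # False means "definitely absent"; True means "possibly present".
        return all(self.bits[pos] for pos in self._positions(key))


bf = BloomFilter()
bf.add("user:42")
present = bf.might_contain("user:42")
```

A negative answer lets a read skip a disk file entirely, which is why a cheap in-memory check like this is useful before touching an SSTable.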

Cassandra Query Language (CQL):

Clients access Cassandra through its nodes using CQL. CQL treats the database, called a keyspace, as a container of tables. Programmers work with CQL through cqlsh, an interactive prompt, or through separate application language drivers. A client can approach any node for a read or write operation. That node then acts as the coordinator, a proxy between the client and the nodes that hold the data.

Write Operations:

On a write, each node first records the write in its commit log. The data is then written to the mem-table. When the contents of the mem-table reach a threshold value, the data is flushed from the mem-table to an SSTable file on disk. Data is partitioned and replicated across the cluster automatically. Cassandra also periodically consolidates the SSTables, discarding unnecessary data.
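The write path described above (commit log, then mem-table, then flush to an SSTable, then periodic consolidation) can be sketched as a toy model. The `ToyNode` class, its `flush_threshold` parameter, and the in-memory lists standing in for disk files are assumptions for illustration only and do not reflect Cassandra's real storage engine.

```python
class ToyNode:
    """Toy model of one node's write path."""

    def __init__(self, flush_threshold=3):
        self.commit_log = []   # append-only record of every write
        self.memtable = {}     # in-memory key -> (timestamp, value)
        self.sstables = []     # flushed tables, oldest first
        self.flush_threshold = flush_threshold

    def write(self, key, value, timestamp):
        self.commit_log.append((key, value, timestamp))  # 1. log for crash recovery
        self.memtable[key] = (timestamp, value)           # 2. update the mem-table
        if len(self.memtable) >= self.flush_threshold:    # 3. flush at the threshold
            self.flush()

    def flush(self):
        # Freeze the mem-table as an SSTable sorted by key, then start a new one.
        self.sstables.append(dict(sorted(self.memtable.items())))
        self.memtable = {}

    def compact(self):
        # Merge all SSTables, keeping only the newest version of each key.
        merged = {}
        for table in self.sstables:
            for key, (ts, value) in table.items():
                if key not in merged or ts > merged[key][0]:
                    merged[key] = (ts, value)
        self.sstables = [dict(sorted(merged.items()))]


node = ToyNode(flush_threshold=2)
node.write("a", "1", timestamp=1)
node.write("b", "2", timestamp=2)  # threshold reached: first SSTable
node.write("a", "3", timestamp=3)
node.write("c", "4", timestamp=4)  # threshold reached: second SSTable
node.compact()                     # the older version of "a" is discarded
```

After compaction a single SSTable remains, holding only the latest version of each key, while the commit log still contains all four writes.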

Read Operations:

On a read, Cassandra gets values from the mem-table and checks the bloom filter to find the SSTable that holds the required data. Coordinators send three types of read request to replicas:

- The Direct request.

- The Digest request.

- The Read repair request.

The coordinator first sends a direct request to one of the replicas. It then sends digest requests to the number of replicas specified by the consistency level and checks whether the returned data is up to date. After that, it sends digest requests to all the remaining replicas. If any node returns an out-of-date value, a background read repair request updates the data on that node.
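The three request types can be tied together in a simplified sketch. The `coordinator_read` function and the dictionary-based replicas are hypothetical, the digest is assumed to be a SHA-256 hash of the value, and the read repair runs inline here even though the text above describes it as background work.

```python
import hashlib


def digest(value):
    # A digest is a hash of the value, cheaper to send than the value itself.
    return hashlib.sha256(value.encode()).hexdigest()


def coordinator_read(replicas, key, consistency_level):
    """replicas: list of dicts mapping key -> (timestamp, value)."""
    # Direct request: one replica returns the full (timestamp, value) pair.
    newest = replicas[0][key]
    # Digest requests: further replicas, up to the consistency level, return a hash.
    for replica in replicas[1:consistency_level]:
        if digest(replica[key][1]) != digest(newest[1]):
            # Mismatch: compare full data and keep the later timestamp.
            newest = max(newest, replica[key], key=lambda tv: tv[0])
    result = newest[1]
    # The remaining replicas are checked as well (inline in this sketch).
    newest = max((r[key] for r in replicas), key=lambda tv: tv[0])
    # Read repair: push the newest version to every replica holding a stale one.
    for replica in replicas:
        if replica[key] != newest:
            replica[key] = newest
    return result


replicas = [
    {"k": (1, "old")},  # stale replica, happens to receive the direct request
    {"k": (2, "new")},
    {"k": (2, "new")},
]
value = coordinator_read(replicas, "k", consistency_level=2)
```

In this sketch a consistency level of 2 is enough for the digest mismatch to expose the stale value before the result is returned, and the stale replica is updated afterwards.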