
Most financial services institutions operate their core banking platform on legacy mainframe technologies. The monolithic, proprietary, inflexible architecture creates many challenges for innovation and cost-efficiency. This blog post explores an open, elastic, and scalable solution powered by Apache Kafka that can solve these problems. Three cloud-native real-world banking solutions show how transactional and analytical workloads can be built at any scale in real-time for traditional business processes like payments or regulatory reporting and innovative new applications like crypto trading.

What Is Core Banking?

Core banking is a banking service provided by networked bank branches. Customers may access their bank accounts and perform basic transactions from member branch offices or connected software applications like mobile apps. Core banking is often associated with retail banking, and many banks treat retail customers as their core banking customers. Wholesale banking, on the other hand, is business conducted between banks. For example, securities trading involves buying and selling stocks, shares, and so on.

Businesses are usually managed via the corporate banking division of the institution. Core banking covers the basic depositing and lending of money. Standard core banking functions include transaction accounts, loans, mortgages, and payments.

Typical business processes of the banking operating system include KYC (“Know Your Customer”), product opening, credit scoring, fraud, refunds, collections, etc.

Banks make these services available across multiple channels like automated teller machines, Internet banking, mobile banking, and branches. In addition, banking software and network technology allow a bank to centralize its record-keeping and allow access from any location.

Automatic analytics for regulatory reporting, flexible configuration to adjust workflows and innovate, and an open API to integrate with third-party ecosystems are essential for modern banking platforms.

Transactional vs. Analytical Workloads in Core Banking

Workloads for analytics and transactions have very different characteristics and requirements. The use cases differ significantly. Most core banking workflows are transactional.

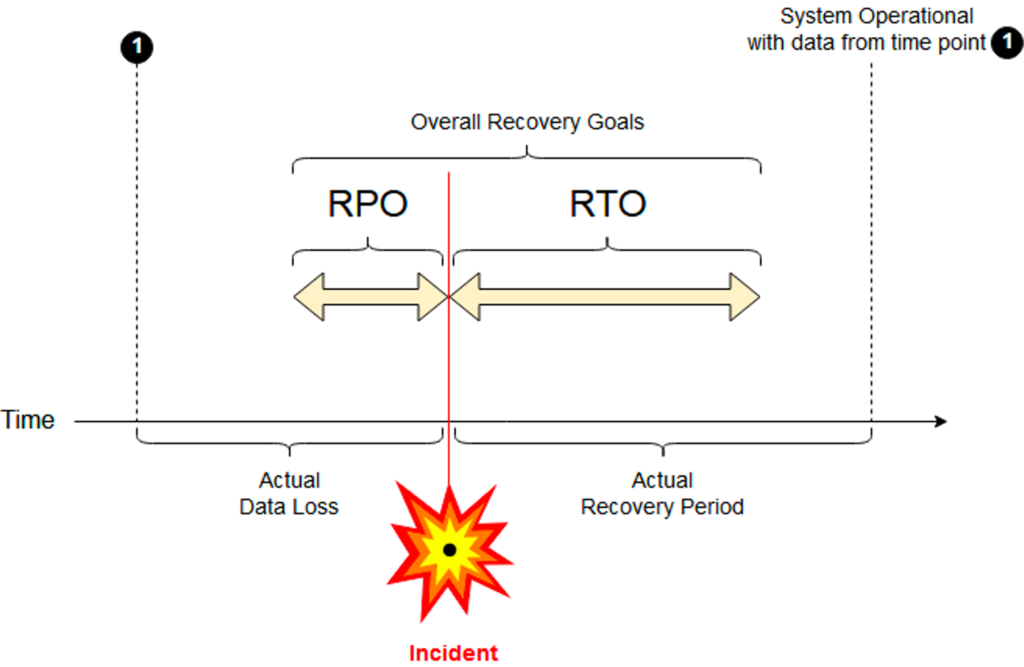

The SLAs are very different, and understanding them is crucial to guarantee the proper behavior in case of infrastructure issues and for disaster recovery:

- RPO (Recovery Point Objective): The actual data loss you can live with in the case of a disaster

- RTO (Recovery Time Objective): The actual recovery period (i.e., downtime) you can live with in the case of a disaster

While downtime or data loss is not good in analytics use cases, it is often acceptable when compared with the cost and risk of guaranteeing an RTO and RPO close to zero. Hence, if the reporting function for end-users is down for an hour or, even worse, a few reports are lost, then life goes on.

In transactional workloads within a core banking platform, RTO and RPO need to be as close to zero as possible, even in the case of a disaster (e.g., if a complete data center or cloud region is down). If the core banking platform loses payment transactions or other compliance processing events, the bank is in huge trouble.

Legacy Core Banking Platforms

Advancements in the Internet and information technology reduced manual work in banks and increased efficiency. Computer software was developed decades ago to perform core banking operations like recording transactions, passbook maintenance, interest calculations on loans and deposits, customer records, the balance of payments, and withdrawal.

Banking Software Running on a Mainframe

Most core banking platforms of traditional financial services companies still run on mainframes. The machines, operating systems, and applications still do a great job. SLAs like RPO and RTO are not new. If you look at IBM’s mainframe products and docs, the core concepts are similar to cutting-edge cloud-native technologies. Downtime, data loss, and similar requirements need to be defined.

The resulting architecture provides the needed guarantees. IBM DB2, IMS, CICS, and Cobol code still run transactional workloads very reliably. A modern IBM z15 mainframe, announced in 2019, provides up to 40TB RAM and 190 cores. That’s very impressive.

Monolithic, Proprietary, Inflexible Mainframe Applications

So, what is the problem with legacy core banking platforms running on a mainframe or similar infrastructure?

- Monolithic: Legacy mainframe applications are extremely monolithic applications. This is not comparable to a monolithic web application from the 2000s running on IBM WebSphere or another J2EE / Java EE application server. It is much worse.

- Proprietary: IMS, CICS, MQ, DB2, et al. are very mature technologies. However, next-generation decision makers, cloud architects, and developers expect open APIs, open-source core infrastructure, best-of-breed solutions, and SaaS with independent freedom of choice for each problem.

- Inflexible: Most legacy core banking applications have done their job for decades. The Cobol code works. However, it is not understood or documented. Cobol developers are scarce, too. The only option is to extend existing applications. Also, the infrastructure is not elastic to scale up and down in a software-defined manner. Instead, companies have to buy hardware for millions of dollars (and still pay an additional fortune for the transactions).

Yes, the mainframe supports up-to-date technologies such as DB2, MQ, WebSphere, Java, Linux, Web Services, Kubernetes, Ansible, and Blockchain! However, this does not solve the existing problems. It only helps when you build new applications, and new applications are usually built with modern cloud-native infrastructure and frameworks instead of relying on legacy concepts.

Optimization and Cost Reduction of Existing Mainframe Applications

The above sections looked at the enterprise architecture with RPO/RTO to guarantee uptime and no data loss. This is crucial for decision-makers responsible for the business unit’s risk and revenue.

However, the third aspect besides revenue and risk is cost. Beyond providing an elastic and flexible infrastructure for the next-generation core banking platform, companies also move away from legacy solutions for cost reasons.

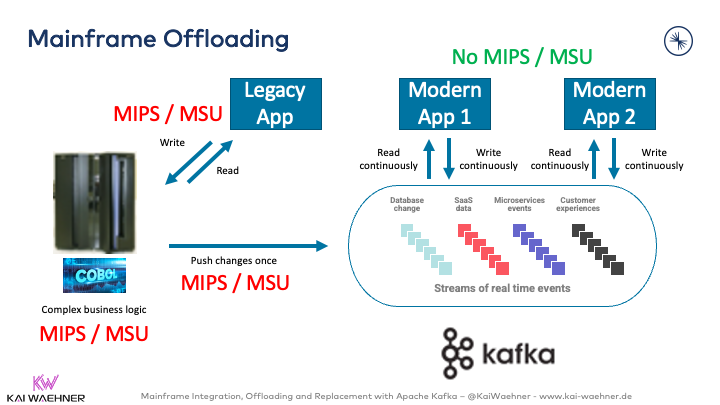

Enterprises save millions of dollars by just offloading data from a mainframe to modern event streaming.

For instance, streaming data empowers the Royal Bank of Canada (RBC) to save millions of dollars by offloading data from the mainframe to Kafka. Here is a quote from RBC:

“… rescue data off of the mainframe, in a cloud-native, microservice-based fashion … [to] … significantly reduce the reads on the mainframe, saving RBC fixed infrastructure costs (OPEX). RBC stayed compliant with bank regulations and business logic, and is now able to create new applications using the same event-based architecture.”

Modern Cloud-Native Core Banking Platforms

This post is not just about offloading and integration. Instead, we look at real-world examples where cloud-native core banking replaced existing legacy mainframes or enabled new FinTech companies to start from scratch in a cutting-edge real-time cloud environment to compete with the traditional FinServ incumbents.

Requirements for a Modern Banking Platform

Here are the requirements I hear regularly on the wish list of executives and lead architects from financial services companies for new banking infrastructure and applications:

- Real-time data processing

- Scalable infrastructure

- High availability

- True decoupling and backpressure handling

- Cost-efficient cost model

- Flexible architecture for agile development

- Elastic scalability

- Standards-based interfaces and open APIs

- Extensible functions and domain-driven separation of concerns

- Secure authentication, authorization, encryption, and audit logging

- Infrastructure-independent deployments across edge, hybrid, and multi-region / multi-cloud environments

What Are Cloud-Native Infrastructure and Applications?

And here are the capabilities of a truly cloud-native infrastructure to build a next-generation core banking system:

- Real-time data processing

- Scalable infrastructure

- High availability

- True decoupling and backpressure handling

- Cost-efficient cost model

- Flexible architecture for agile development

- Elastic scalability

- Standards-based interfaces and open APIs

- Extensible functions and domain-driven separation of concerns

- Secure authentication, authorization, encryption, and audit logging

- Infrastructure-independent deployments across edge, hybrid, and multi-region / multi-cloud environments

I think you get my point here: Adopting cloud-native infrastructure is critical for success in building next-generation banking software. No matter if you

- are on-premise or in the cloud

- are a traditional player or a startup

- focus on a specific country or language, or operate across regions or even globally

Apache Kafka = Cloud-Native Infrastructure for Real-Time Transactional Workloads

Many people think that Apache Kafka is not built for transactions and should only be used for big data analytics. This blog post explores when and how to use Kafka in resilient, mission-critical architectures and when to use the built-in Transaction API.

Kafka is a distributed, fault-tolerant system that is resilient by nature (if you deploy and operate it correctly). Zero downtime and zero data loss can be guaranteed, like in your favorite database, mainframe, or other core platforms.

Elastic scalability and rolling upgrades enable a flexible and reliable data streaming infrastructure for transactional workloads to guarantee business continuity. The architect can even stretch a cluster across regions with additional tooling. This setup ensures zero data loss and zero downtime even in case of a disaster where a data center is completely down.
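As a rough illustration of what "deploy and operate it correctly" can mean for durability, the following Python sketch collects settings that are commonly combined for transactional workloads and checks them with a small helper. The keys are standard Apache Kafka configuration names. The values, the `transactional.id`, the replication factor, and the `durability_issues` helper are assumptions made for this example, not a recommendation from the platforms discussed here.

```python
# Settings commonly combined when Kafka carries transactional workloads.
# Keys are standard Kafka configuration names; values are illustrative.
producer_config = {
    "acks": "all",                    # wait for all in-sync replicas
    "enable.idempotence": "true",     # no duplicates on producer retries
    "transactional.id": "payments-producer-1",  # hypothetical ID for the Transaction API
}

consumer_config = {
    "isolation.level": "read_committed",  # hide records of aborted transactions
    "enable.auto.commit": "false",        # commit offsets explicitly
}

topic_config = {
    "min.insync.replicas": "2",                 # a write needs at least two replicas
    "unclean.leader.election.enable": "false",  # never elect an out-of-sync leader
}

REPLICATION_FACTOR = 3  # chosen when the topic is created (assumed value)


def durability_issues(producer, topic, replication_factor):
    """Return warnings for settings not explicitly set to a durable value."""
    issues = []
    if producer.get("acks") != "all":
        issues.append("acks should be 'all'")
    if producer.get("enable.idempotence") != "true":
        issues.append("enable.idempotence should be 'true'")
    min_isr = int(topic.get("min.insync.replicas", "1"))
    if min_isr < 2:
        issues.append("min.insync.replicas should be at least 2")
    if replication_factor <= min_isr:
        issues.append("replication factor should exceed min.insync.replicas")
    if topic.get("unclean.leader.election.enable") != "false":
        issues.append("unclean leader election should be disabled")
    return issues


issues = durability_issues(producer_config, topic_config, REPLICATION_FACTOR)
risky = durability_issues({"acks": "1"}, {}, replication_factor=1)
```

The dictionaries are plain data, so they could be handed to a Kafka client library or an admin tool. The helper only demonstrates the reasoning: acknowledged writes must exist on more than one replica before a broker failure can be survived without data loss.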

The post “Global Kafka Deployments” explores the different deployment options and their trade-offs in more detail.

Apache Kafka in Banking and Financial Services

Event streaming in financial services is growing like crazy. Continuous real-time data integration and processing are mandatory for many use cases. Many business departments in the financial services sector deploy Apache Kafka for mission-critical transactional workloads and big data analytics, including core banking. High scalability, high reliability, and an elastic open infrastructure are the key reasons for Kafka’s success.

To learn more about different use cases, architectures, and real-world examples in the FinServ sector, check out the post “Apache Kafka in the Financial Services Industry.” Use cases include:

- Wealth management and capital markets

- Market and credit risk

- Cybersecurity

- IT modernization

- Retail and corporate banking

- Customer experience

Modern Cloud-Native Core Banking Solutions Powered by Kafka

Now, let’s explore specific examples of cloud-native core banking solutions built with Apache Kafka. The following subsections show three real-world examples.

Thought Machine – Correctness and Scale in a Single Platform

Thought Machine is an innovative and flexible core banking operating system. The core capabilities of Thought Machine’s solution include:

- Cloud-native core banking software

- Transactional workloads (24/7, zero data loss)

- Flexible product engine powered by smart contracts (not blockchain)

The cloud-native core banking operating system enables a bank to achieve a wide scale of customization without changing anything on the underlying platform. This is hugely advantageous and a critical part of how its architecture is a counterweight to the “spaghetti” that arises in other systems when customization and platform functionality are not separated.

Thought Machine’s Kafka Summit talk from 2021 explores how Thought Machine’s core banking system ‘Vault’ was built in a cloud-first manner with Apache Kafka at its heart. It leverages event streaming to enable asynchronous and parallel processing at scale, specifically focusing on the architectural patterns to ensure ‘correctness’ in such an environment.

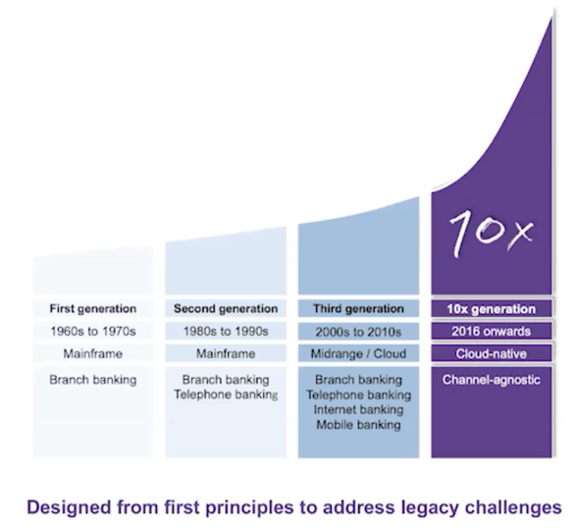

10x Banking – Channel Agnostic Transactions in Real-Time

10x Banking provides a cloud-native core banking platform. Their Kafka Summit talk covered the history of core banking and how they leverage Apache Kafka in conjunction with other open-source technologies within their commercial platform.

10x’s cloud-native approach provides flexible product lifecycles. Time-to-market is a key benefit. Organizations do not need to start from scratch. A unified data model and tooling allow them to focus on the business problems.

The 10x platform is a secure, reliable, scalable, and regulatory-compliant SaaS platform that minimizes the regulatory burden and cost. It is built on a domain-driven design with true decoupling between transactional workloads and analytics/reporting.

Kafka is a data hub within the comprehensive platform for real-time analytics, transactions, and cybersecurity. However, Apache Kafka is not the silver bullet for every problem. Hence, 10x chose a best-of-breed approach to combine different open-source frameworks, commercial products, and SaaS offerings to build the cloud-native banking framework.

Custodigit – Secure Crypto Investments With Stateful Data Streaming and Orchestration

Custodigit is a modern banking platform for digital assets and cryptocurrencies. It provides crucial features and guarantees for seriously regulated crypto investments:

- Secure storage of wallets

- Sending and receiving on the blockchain

- Trading via brokers and exchanges

- Regulated environment (a key aspect and no surprise, as this product comes from Switzerland, a very regulated market)

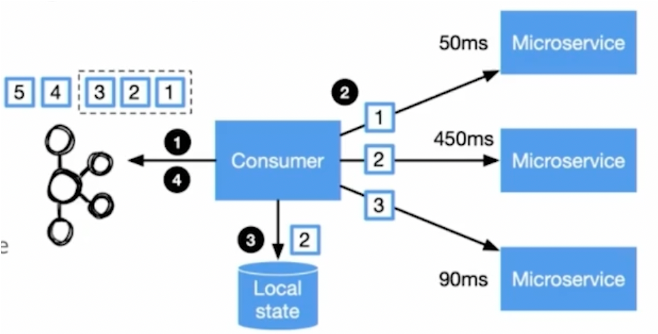

Kafka is the central core banking nervous system of Custodigit’s microservice architecture. Stateful Kafka Streams applications provide workflow orchestration with the “distributed saga” design pattern for the choreography between microservices. Kafka Streams was selected because of:

- lean, decoupled microservices

- metadata management in Kafka

- unified data structure across microservices

- transaction API (aka exactly-once semantics)

- scalability and reliability

- real-time processing at scale

- a higher-level domain-specific language for stream processing

- long-running stateful processes
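The core idea of the saga pattern mentioned above can be sketched in a few lines of plain Python: every step of a long-running workflow carries a compensating action, and a failure triggers the compensations of the completed steps in reverse order. This is an in-memory illustration only, not Custodigit's implementation and not Kafka Streams code. The step names, the `state` dictionary, and the `run_saga` helper are hypothetical. In a Kafka-based system, each step would typically be a microservice reacting to events, with the workflow state kept in a fault-tolerant state store.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SagaStep:
    name: str
    action: Callable[[dict], None]        # forward operation
    compensation: Callable[[dict], None]  # undo of the forward operation


def run_saga(steps: List[SagaStep], state: dict) -> bool:
    """Run steps in order; on failure, compensate completed steps in reverse."""
    completed: List[SagaStep] = []
    for step in steps:
        try:
            step.action(state)
            completed.append(step)
        except Exception as error:
            state["log"].append(f"{step.name} failed: {error}")
            for done in reversed(completed):
                done.compensation(state)
                state["log"].append(f"{done.name} compensated")
            return False
    return True


# Hypothetical steps of a crypto trade.
def reserve_funds(state: dict) -> None:
    state["reserved"] = state["amount"]


def release_funds(state: dict) -> None:
    state["reserved"] = 0


def place_order(state: dict) -> None:
    if state["exchange_down"]:
        raise RuntimeError("exchange unavailable")
    state["order_placed"] = True


def cancel_order(state: dict) -> None:
    state["order_placed"] = False


trade_saga = [
    SagaStep("reserve_funds", reserve_funds, release_funds),
    SagaStep("place_order", place_order, cancel_order),
]

failed_trade = {"amount": 100, "exchange_down": True, "log": []}
succeeded = run_saga(trade_saga, failed_trade)
```

In the failing run, the reserved funds are released again because the order could not be placed. A saga therefore does not roll back like a database transaction; it reaches a consistent state through explicit compensating steps, which is why it suits long-running processes that span several services.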

Cloud-Native Core Banking Offers Elastic Scale for Transactional Workloads

Modern core banking software needs to be elastic, scalable, and real-time. This is true for transactional workloads like KYC or credit scoring and analytical workloads like regulatory reporting. Apache Kafka enables processing transactional and analytical workloads in many modern banking solutions.

Thought Machine, 10x Banking, and Custodigit are three cloud-native examples powered by the Apache Kafka ecosystem to enable the next generation of banking software in real-time. Open Banking is achieved with open APIs to integrate with other third-party services.

The integration, offloading, and later replacement of legacy technologies such as mainframes with modern data streaming technologies prove the business value in many organizations. Kafka is not a silver bullet but the central and mission-critical data hub for real-time data integration and processing.
